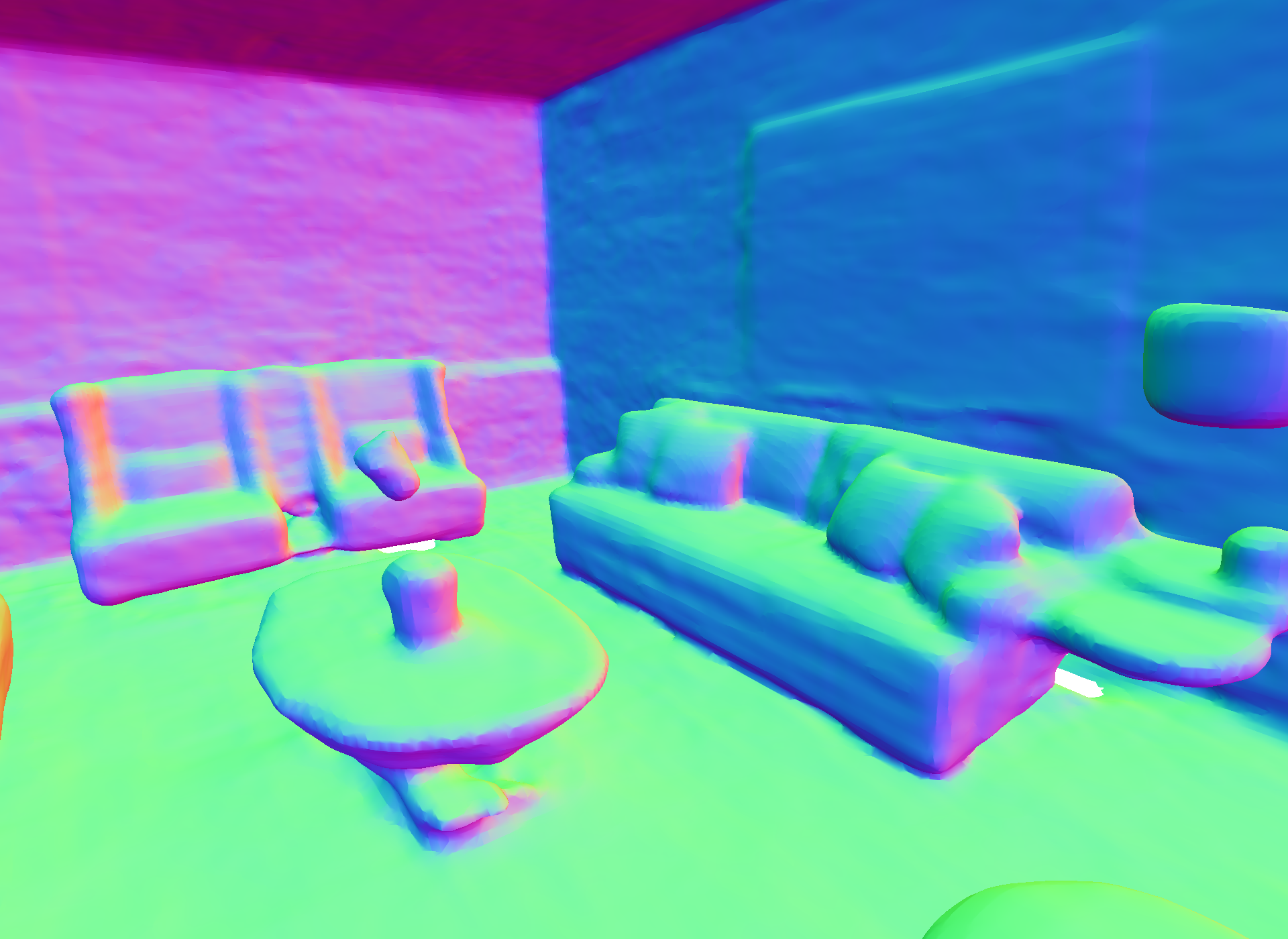

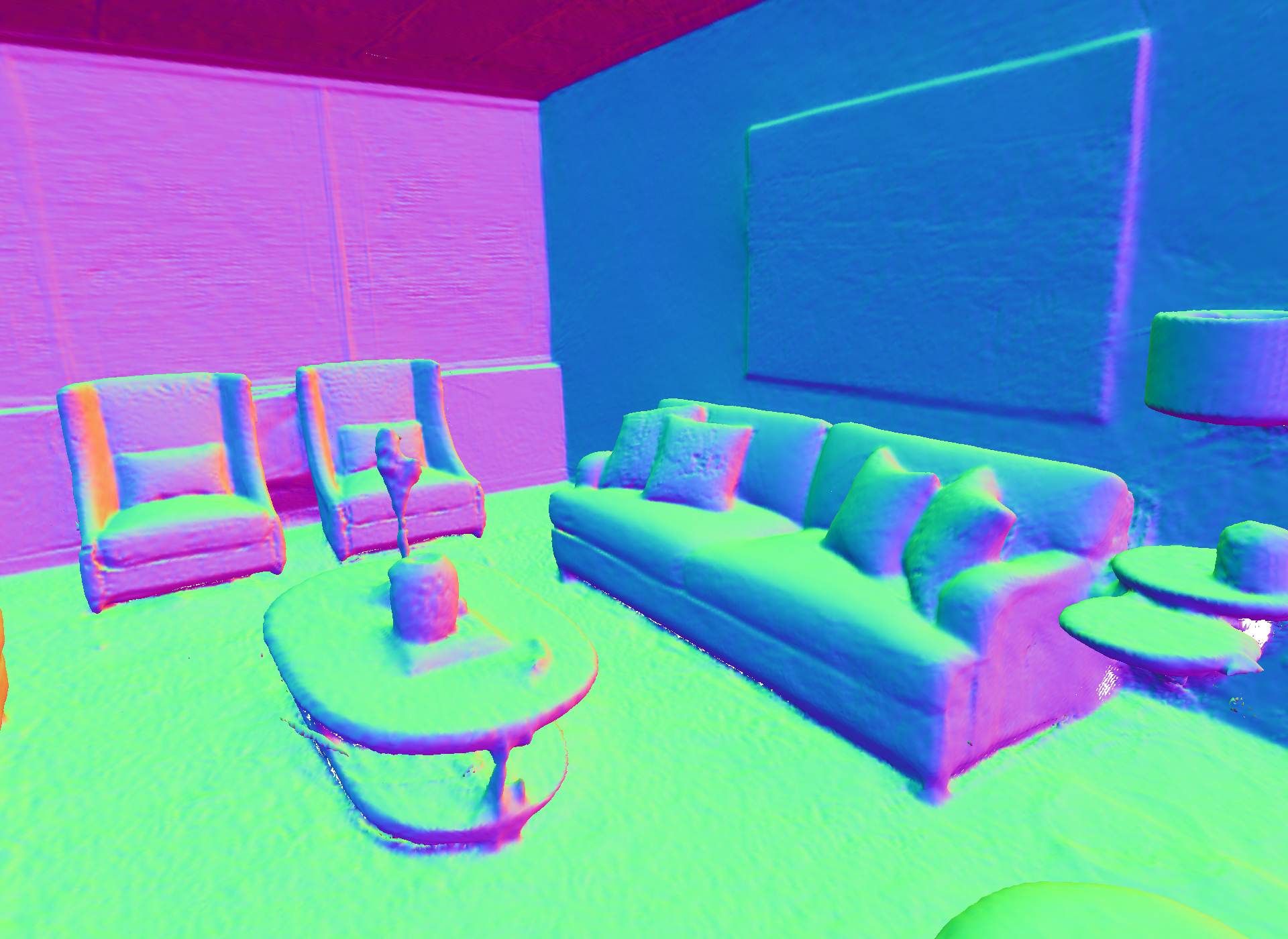

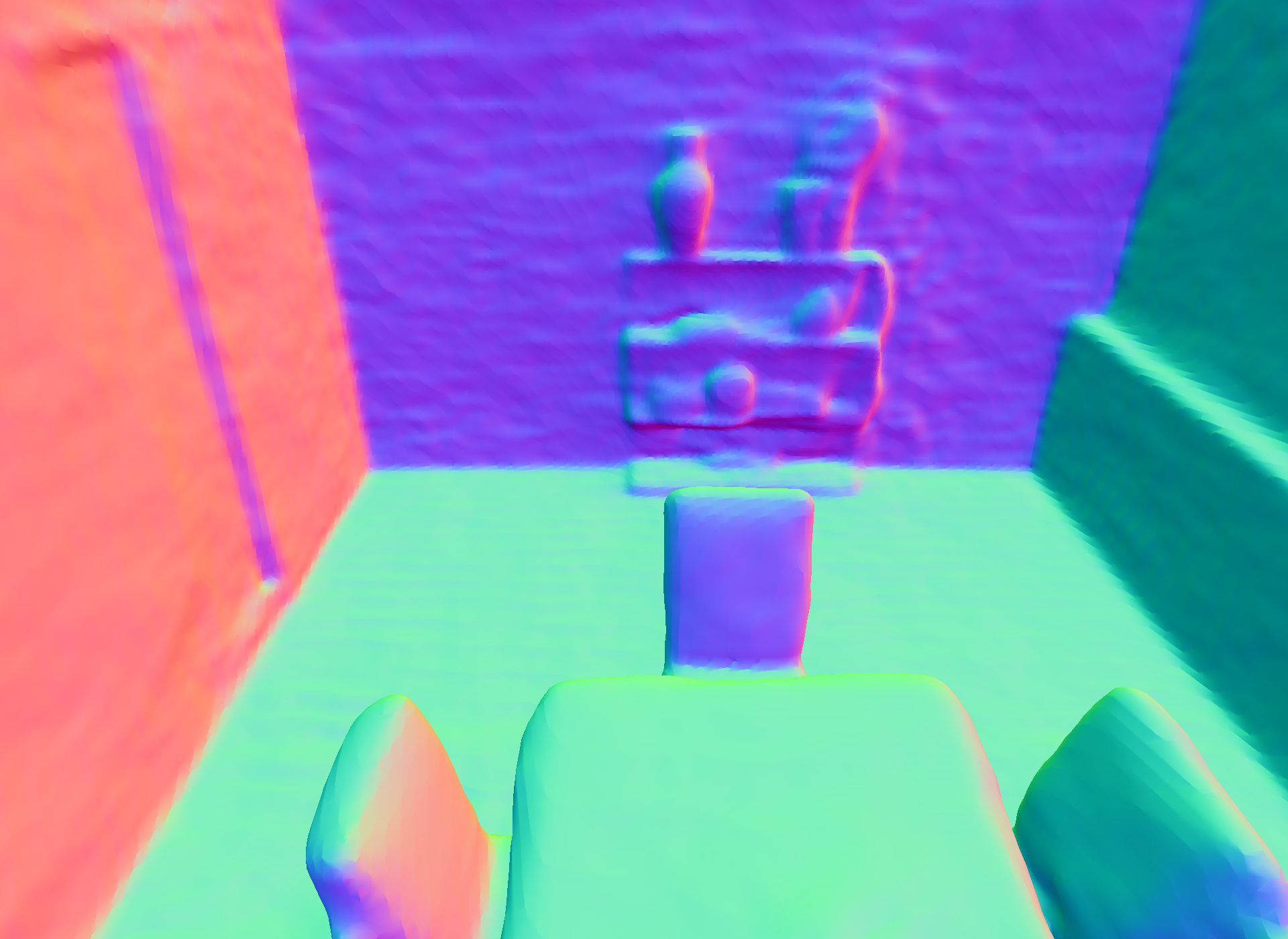

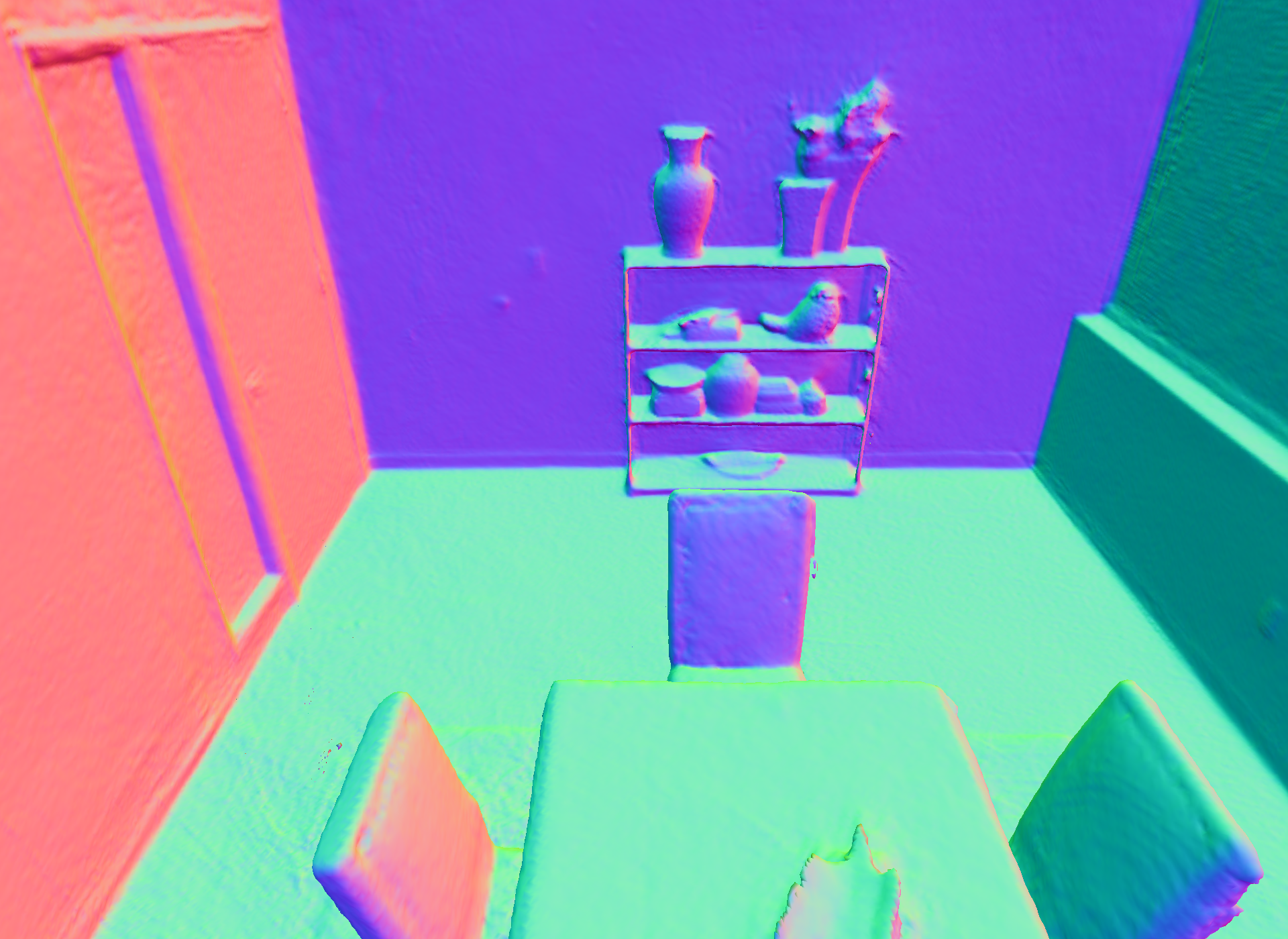

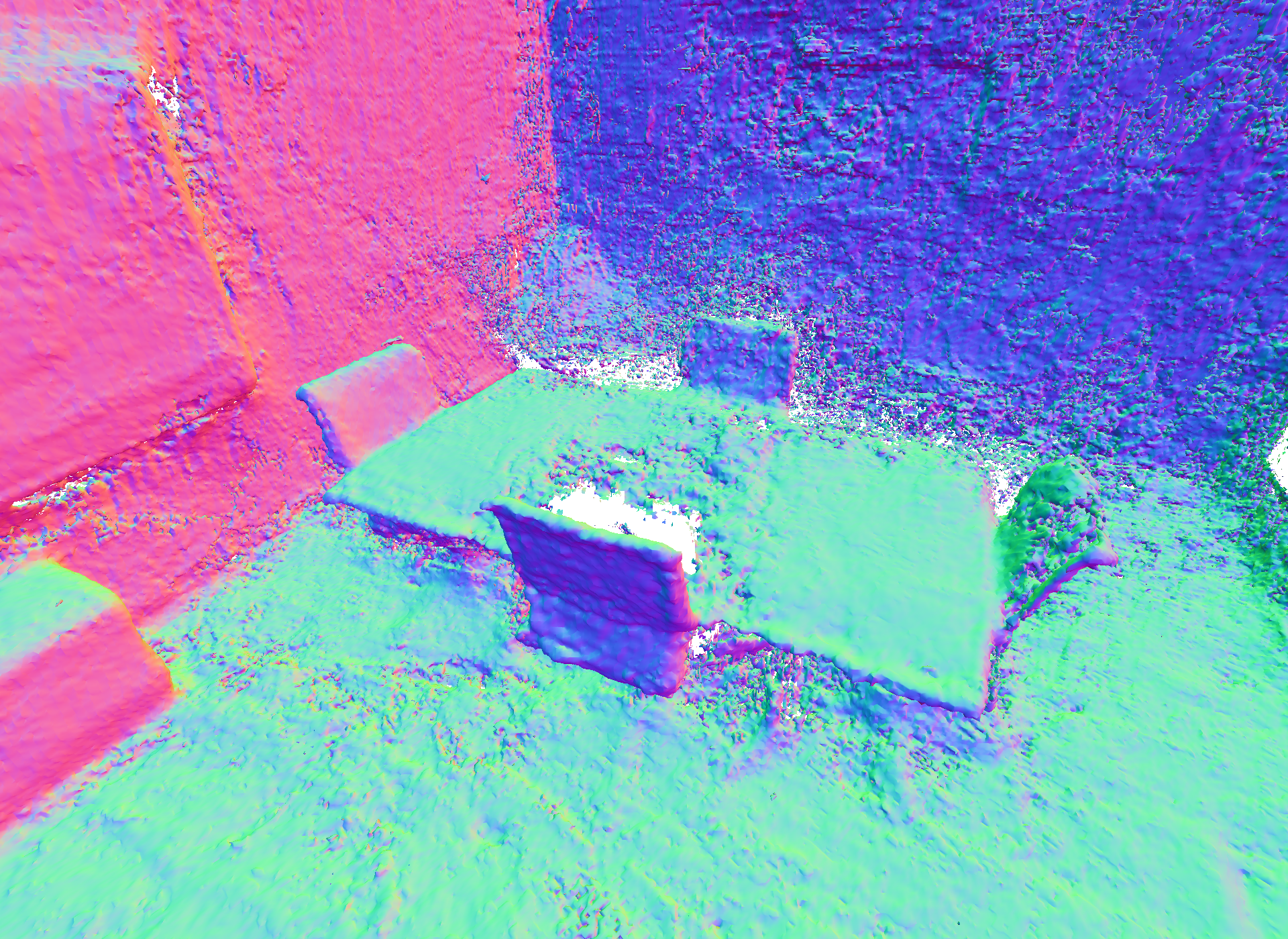

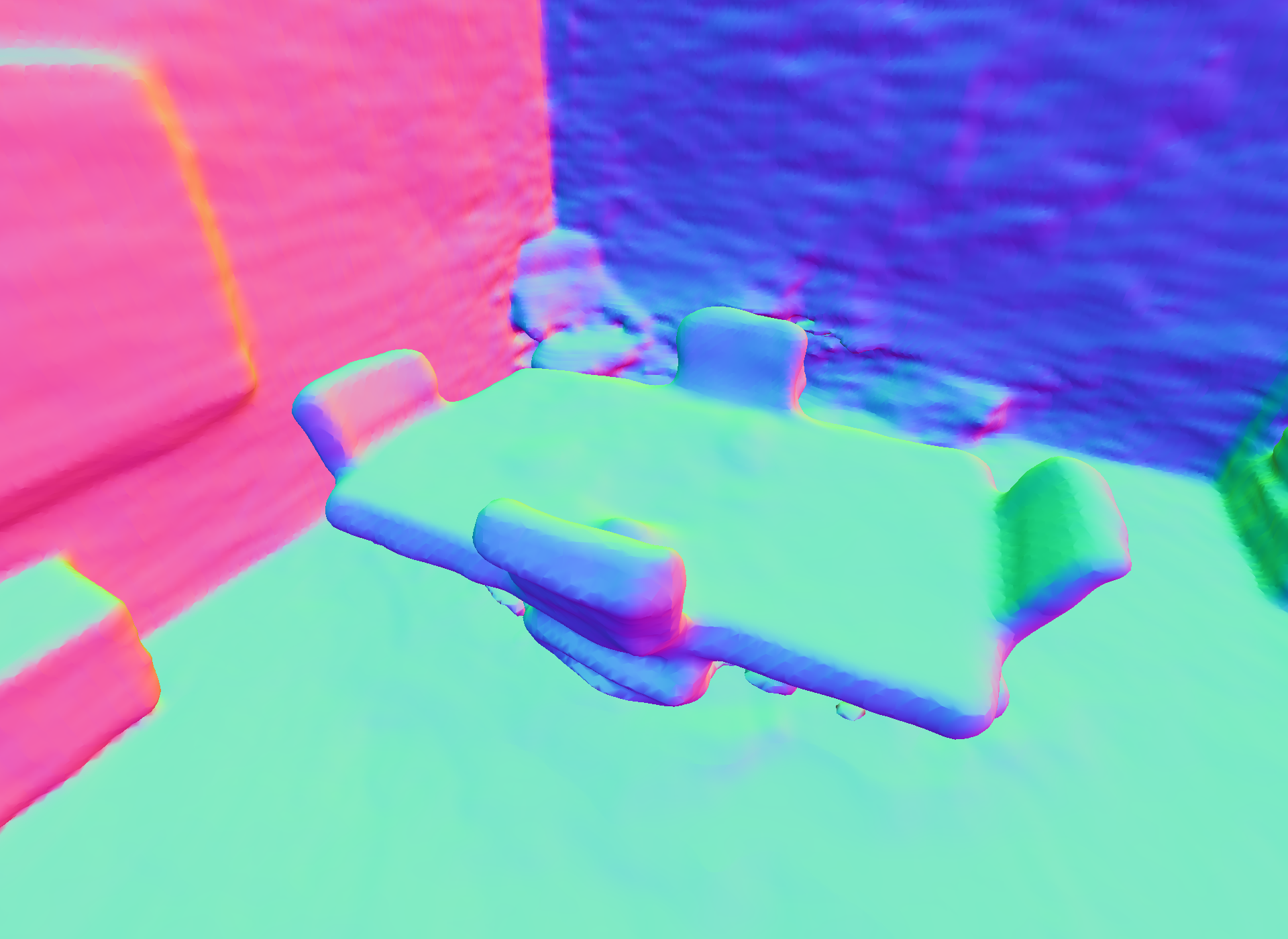

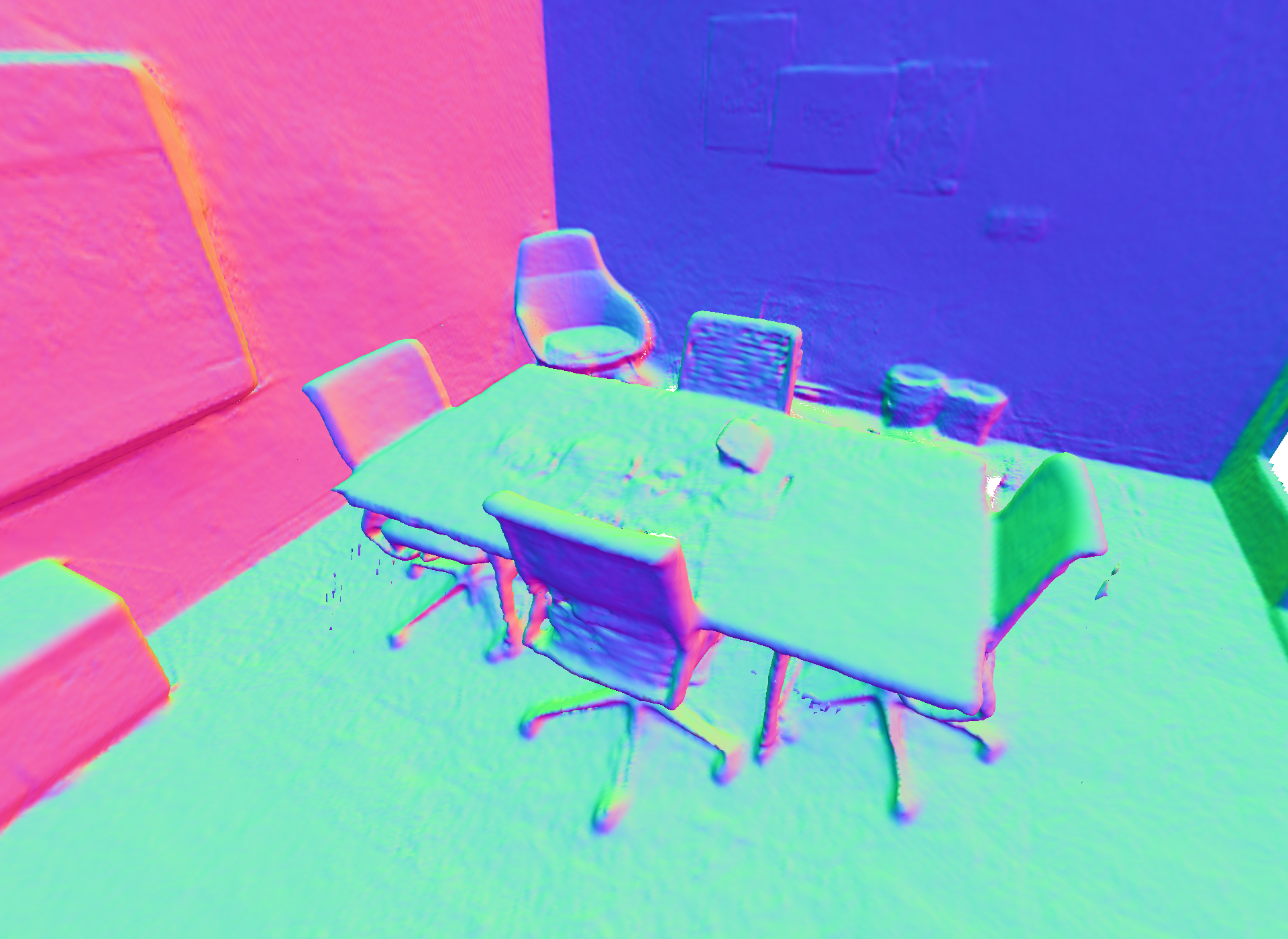

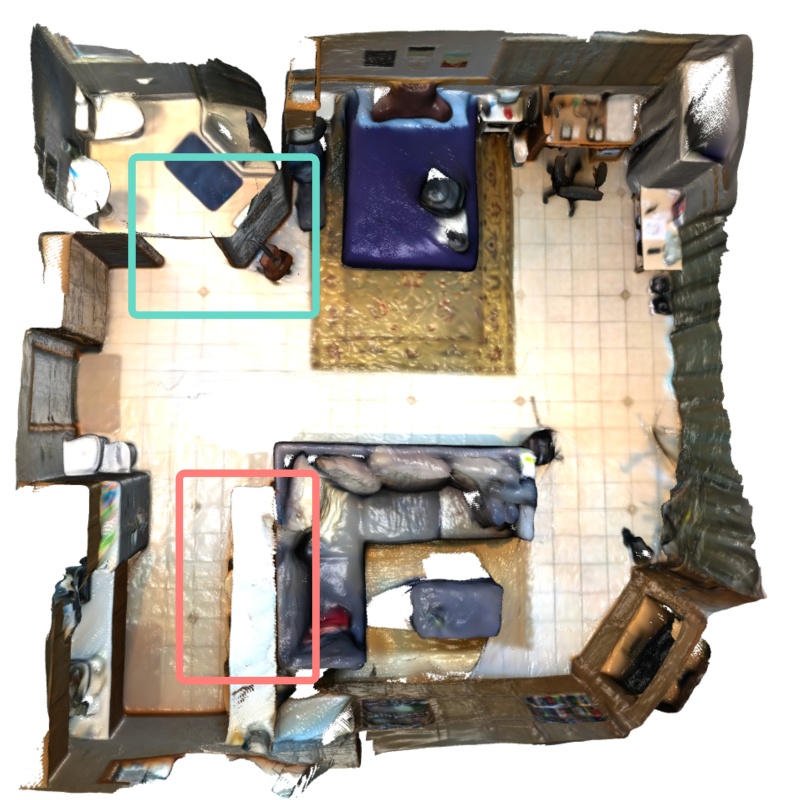

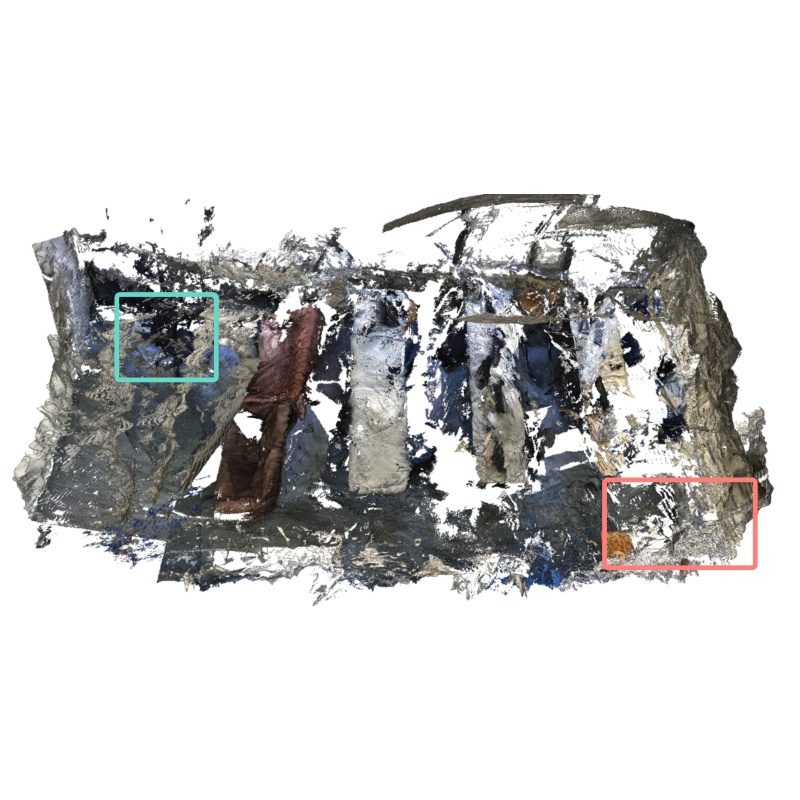

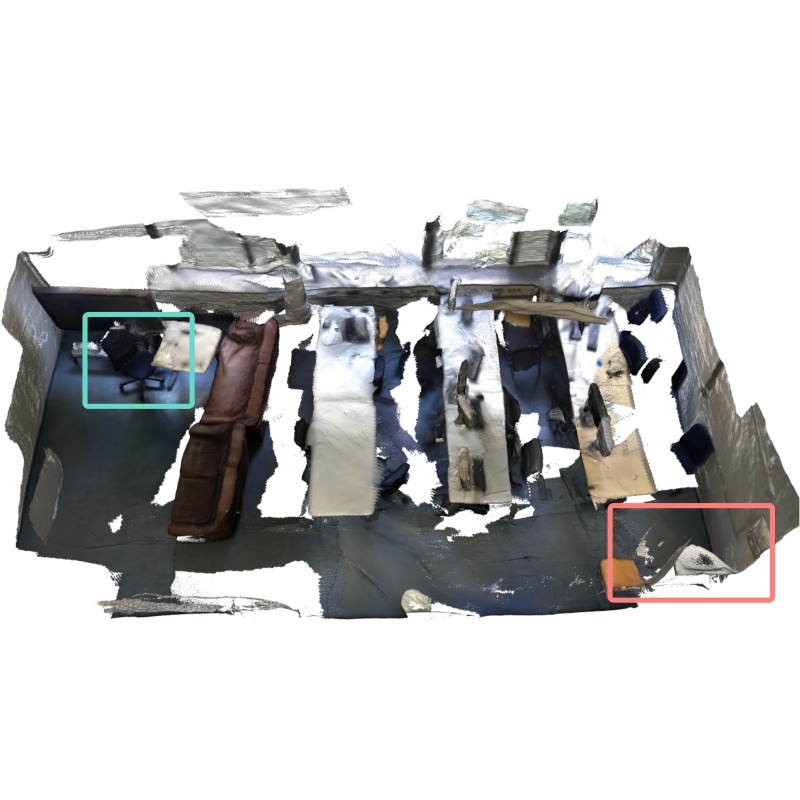

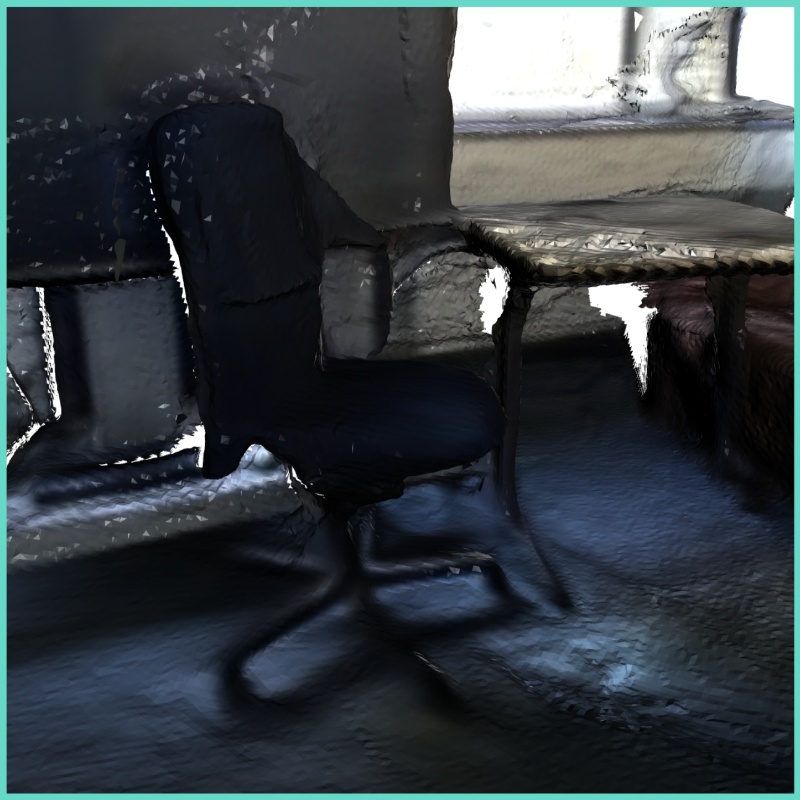

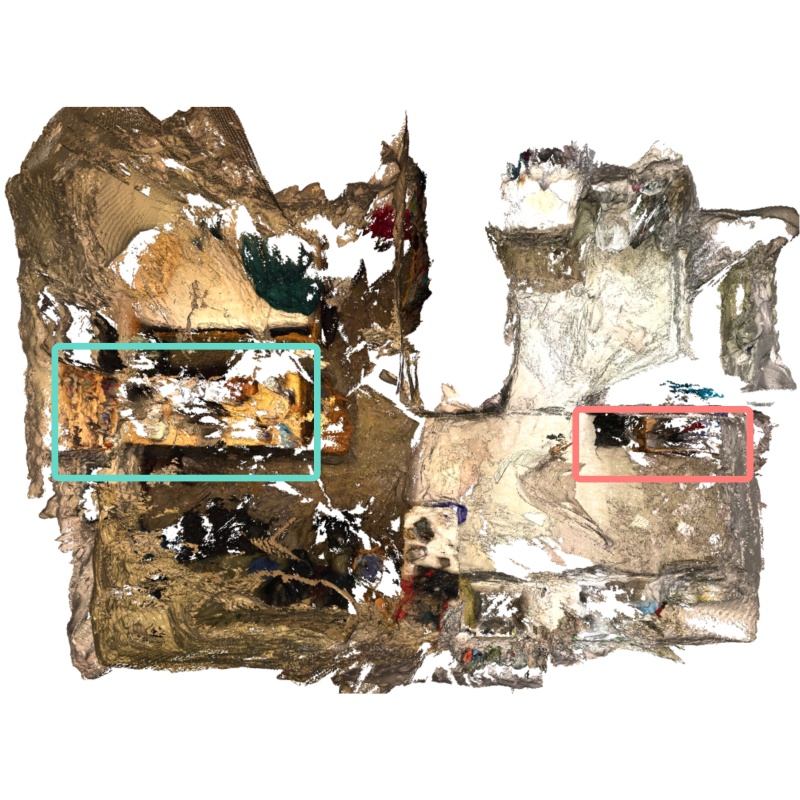

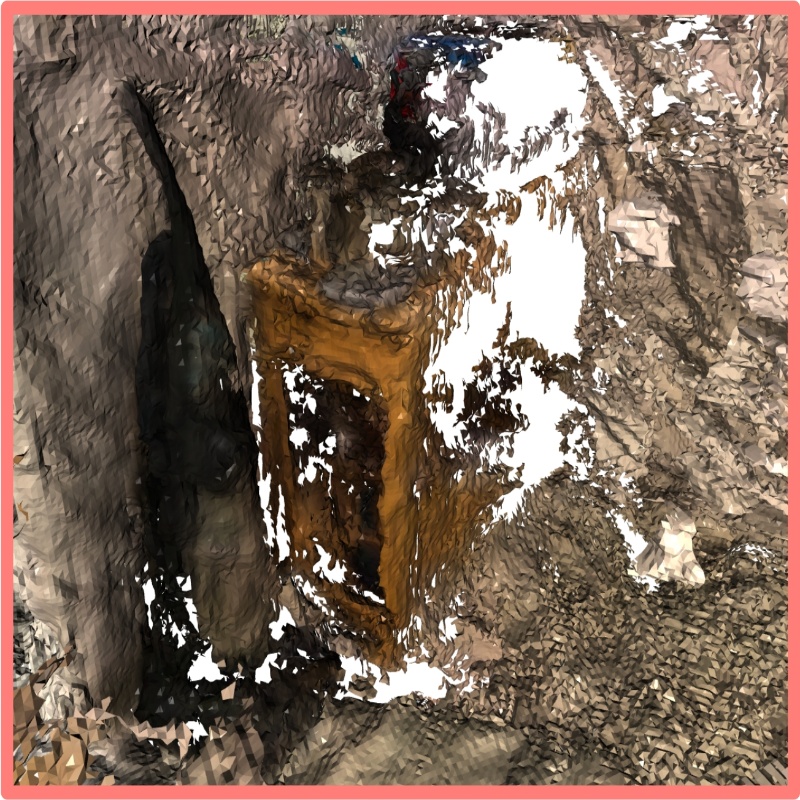

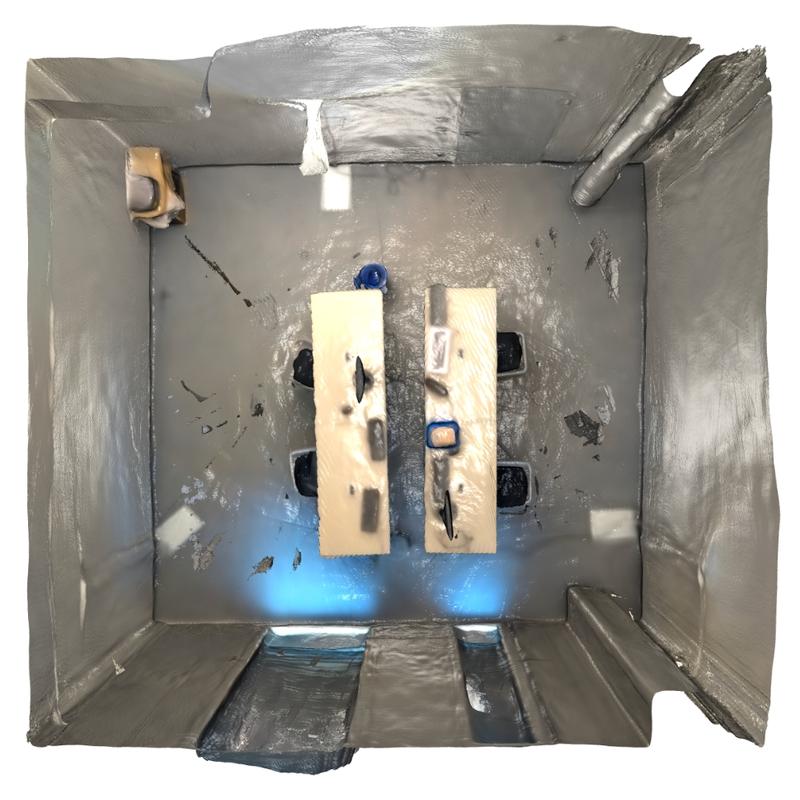

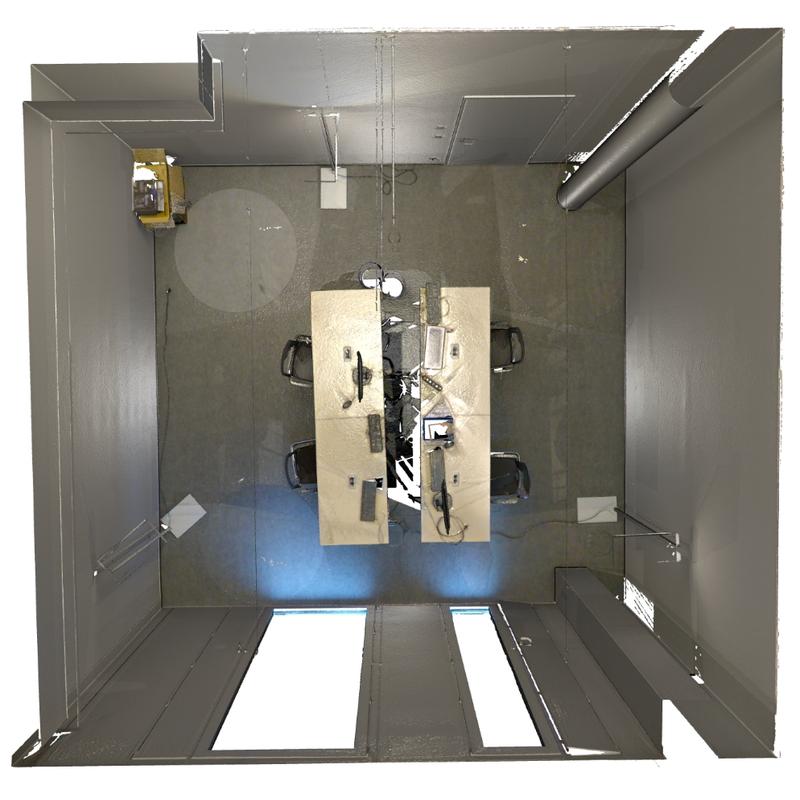

We present HI-SLAM2, a geometry-aware Gaussian SLAM system that achieves fast and accurate monocular scene reconstruction using only RGB input. Existing Neural SLAM and 3DGS-based SLAM methods often trade rendering quality against geometry accuracy; our research demonstrates that both can be achieved simultaneously with RGB input alone. The key idea of our approach is to enhance geometry estimation by combining easy-to-obtain monocular priors with learning-based dense SLAM, and then to use 3D Gaussian splatting as our core map representation to model the scene efficiently. Upon loop closure, our method ensures on-the-fly global consistency through efficient pose graph bundle adjustment and instant map updates, which explicitly deform the 3D Gaussian units based on the updates of their anchored keyframes. Furthermore, we introduce a grid-based scale alignment strategy that improves the scale consistency of the prior depths and thereby preserves finer depth details. Through extensive experiments on Replica, ScanNet, and ScanNet++, we demonstrate significant improvements over existing Neural SLAM methods, and our method even surpasses RGB-D-based methods in both reconstruction and rendering quality.
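To make the grid-based scale alignment idea more concrete, here is a minimal NumPy sketch under stated assumptions; it is not the HI-SLAM2 implementation. It assumes that a monocular prior depth map should be rescaled to agree with the depth estimated by the SLAM backend, that each cell of a coarse grid gets its own scale fitted in closed form by least squares (scale only, no shift), that cells with too few valid pixels fall back to a single global scale, and that the per-cell scales are bilinearly interpolated to pixel resolution. In the actual system the grid scales may instead be optimized jointly inside bundle adjustment. The function names `fit_scale`, `upsample_bilinear`, and `grid_scale_align` are hypothetical.

```python
import numpy as np

# Hypothetical sketch of per-cell scale alignment; not the HI-SLAM2 code.

def fit_scale(prior, target, mask, min_pixels=16):
    """Closed-form least-squares scale s minimizing sum over mask of (s * prior - target)^2."""
    p, d = prior[mask], target[mask]
    denom = float(np.dot(p, p))
    if p.size < min_pixels or denom == 0.0:
        return None  # too few usable pixels in this region
    return float(np.dot(p, d)) / denom


def upsample_bilinear(grid_vals, cy, cx, H, W):
    """Separable linear interpolation between cell centers; values are clamped at the borders."""
    # Interpolate along x for every grid row -> shape (gh, W).
    rows = np.stack([np.interp(np.arange(W), cx, r) for r in grid_vals])
    # Interpolate along y for every pixel column -> shape (H, W).
    return np.stack([np.interp(np.arange(H), cy, rows[:, x]) for x in range(W)], axis=1)


def grid_scale_align(prior, target, valid, grid=(4, 4)):
    """Rescale `prior` to match `target` with one scale per grid cell.

    prior:  (H, W) monocular prior depth (assumed up to scale)
    target: (H, W) depth from the SLAM backend (assumed metric-consistent)
    valid:  (H, W) boolean mask of pixels where `target` is trusted
    Returns the aligned depth map and the (gh, gw) per-cell scales.
    """
    H, W = prior.shape
    gh, gw = grid
    global_s = fit_scale(prior, target, valid)
    if global_s is None:
        raise ValueError("not enough valid pixels for alignment")
    ys = np.linspace(0, H, gh + 1).astype(int)  # cell boundaries along y
    xs = np.linspace(0, W, gw + 1).astype(int)  # cell boundaries along x
    scales = np.full((gh, gw), global_s)
    for i in range(gh):
        for j in range(gw):
            cell = np.zeros_like(valid)
            cell[ys[i]:ys[i + 1], xs[j]:xs[j + 1]] = True
            s = fit_scale(prior, target, valid & cell)
            if s is not None:
                scales[i, j] = s  # otherwise keep the global fallback
    cy = (ys[:-1] + ys[1:]) / 2.0 - 0.5  # cell centers in pixel coordinates
    cx = (xs[:-1] + xs[1:]) / 2.0 - 0.5
    scale_map = upsample_bilinear(scales, cy, cx, H, W)
    return scale_map * prior, scales
```

For example, `grid_scale_align(prior, slam_depth, valid_mask, grid=(4, 4))` returns a prior depth map whose scale varies smoothly across the image. Compared with a single global scale, this lets the correction follow spatially varying scale errors in the prior, which is the motivation the abstract gives for the grid-based strategy.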

System Overview: Our framework consists of four key stages: online camera tracking, online loop closing, continuous mapping, and offline refinement. Camera tracking uses a recurrent-network-based approach to estimate camera poses and generate depth maps from RGB input. For 3D scene representation, we use 3DGS to model scene geometry, which enables efficient online map updates. These updates are integrated with pose graph bundle adjustment (BA) for online loop closing, achieving both fast updates and high-quality rendering. In the offline refinement stage, camera poses and scene geometry undergo full BA, followed by joint optimization of Gaussian primitives and camera poses to further enhance global consistency.
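The online map updates that accompany loop closing can be sketched as follows. This is a hypothetical NumPy illustration, not the authors' code, and it rests on several assumptions: each Gaussian is anchored to exactly one keyframe, keyframe poses are 4x4 camera-to-world matrices, Gaussian orientations are stored as 3x3 rotation matrices (real 3DGS code usually stores quaternions), and an optional per-keyframe scale factor (the hypothetical `kf_scale`) models a similarity-style correction. Each Gaussian center is expressed in its anchor keyframe's frame under the old pose and then mapped back to the world with the corrected pose, so the Gaussian moves rigidly with its keyframe. The name `deform_gaussians` is also hypothetical.

```python
import numpy as np

# Hypothetical sketch of anchor-based Gaussian deformation; not the HI-SLAM2 code.

def deform_gaussians(means, rots, scales, anchor_ids, old_poses, new_poses, kf_scale=None):
    """Move Gaussians along with their anchor keyframes after a pose update.

    means:      (N, 3) world-frame Gaussian centers
    rots:       (N, 3, 3) world-frame Gaussian rotation matrices
    scales:     (N, 3) per-axis Gaussian extents
    anchor_ids: (N,) index of the keyframe each Gaussian is anchored to
    old_poses, new_poses: (K, 4, 4) camera-to-world keyframe poses before/after the update
    kf_scale:   optional (K,) scale correction per keyframe; defaults to 1 (rigid update)
    """
    K = old_poses.shape[0]
    if kf_scale is None:
        kf_scale = np.ones(K)
    R_o = old_poses[anchor_ids, :3, :3]  # (N, 3, 3) old anchor rotations
    t_o = old_poses[anchor_ids, :3, 3]   # (N, 3) old anchor translations
    R_n = new_poses[anchor_ids, :3, :3]  # (N, 3, 3) new anchor rotations
    t_n = new_poses[anchor_ids, :3, 3]   # (N, 3) new anchor translations
    s = kf_scale[anchor_ids][:, None]    # (N, 1) per-Gaussian scale correction

    # Center in the anchor keyframe's camera frame: R_o^T (mu - t_o).
    local = np.einsum("nji,nj->ni", R_o, means - t_o)
    # Back to the world with the corrected pose: R_n (s * local) + t_n.
    new_means = np.einsum("nij,nj->ni", R_n, s * local) + t_n
    # Relative rotation R_n R_o^T, applied on the left of each Gaussian's orientation.
    delta_R = np.einsum("nij,nkj->nik", R_n, R_o)
    new_rots = np.einsum("nij,njk->nik", delta_R, rots)
    # Extents grow or shrink with the keyframe's scale correction.
    new_scales = scales * s
    return new_means, new_rots, new_scales
```

Because every Gaussian is transformed in closed form from its anchor's pose change, the map can follow a pose graph correction immediately, without re-optimizing the Gaussians first; any remaining inconsistencies would then be left to subsequent mapping and refinement.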

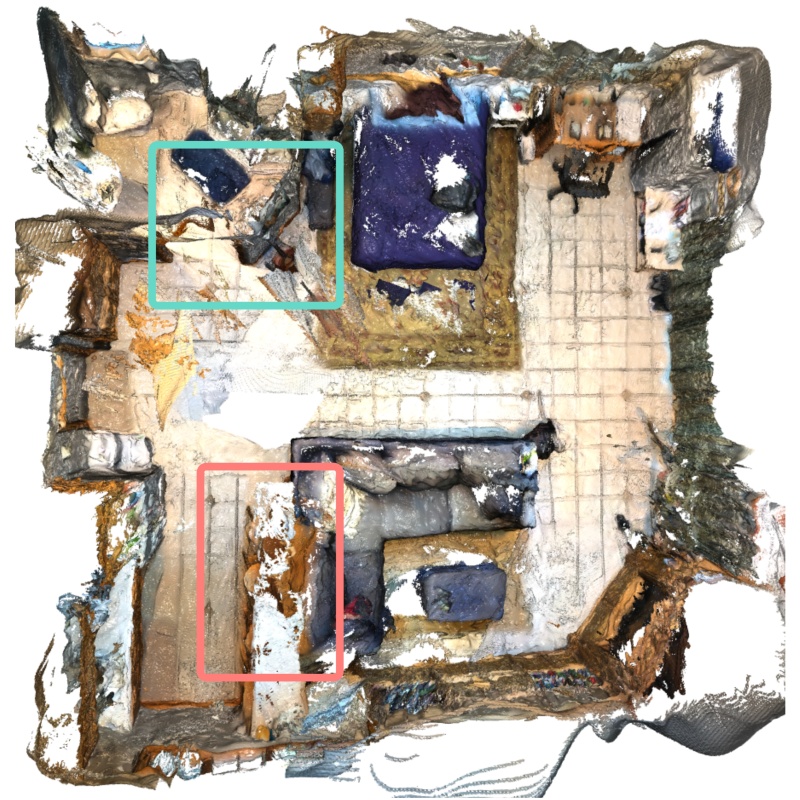

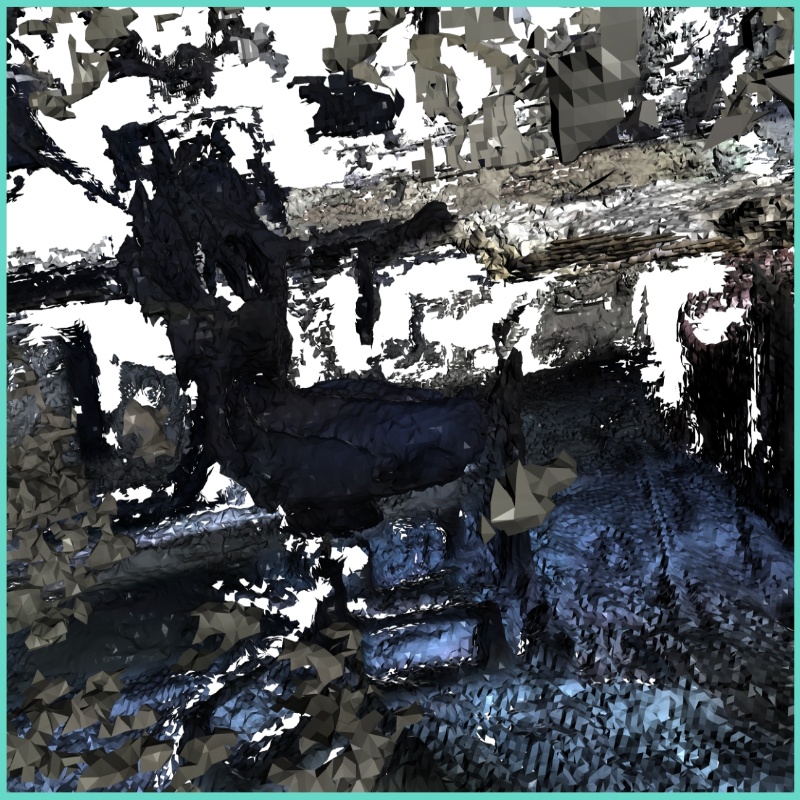

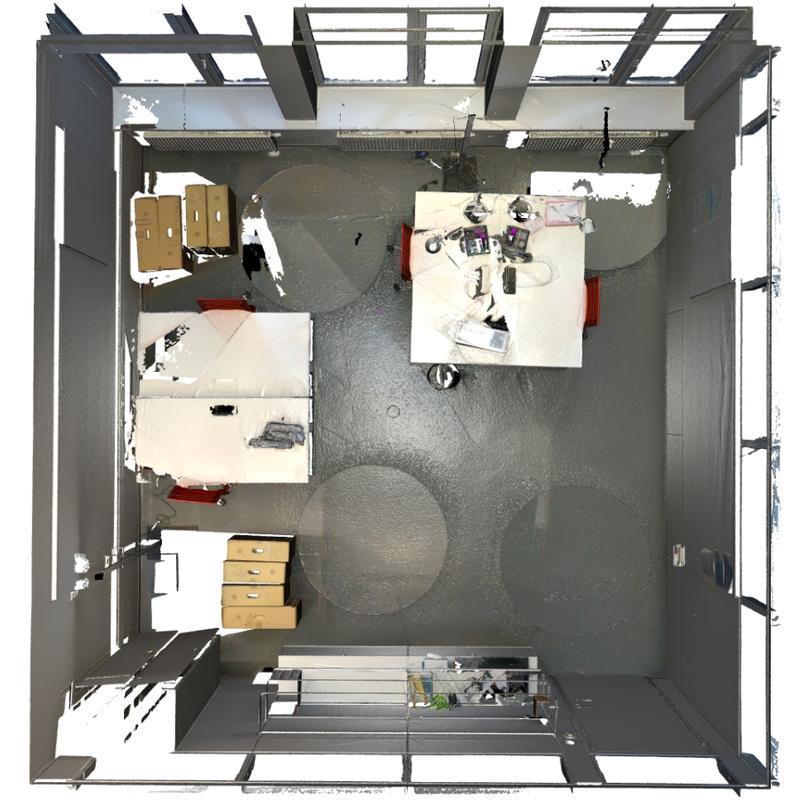

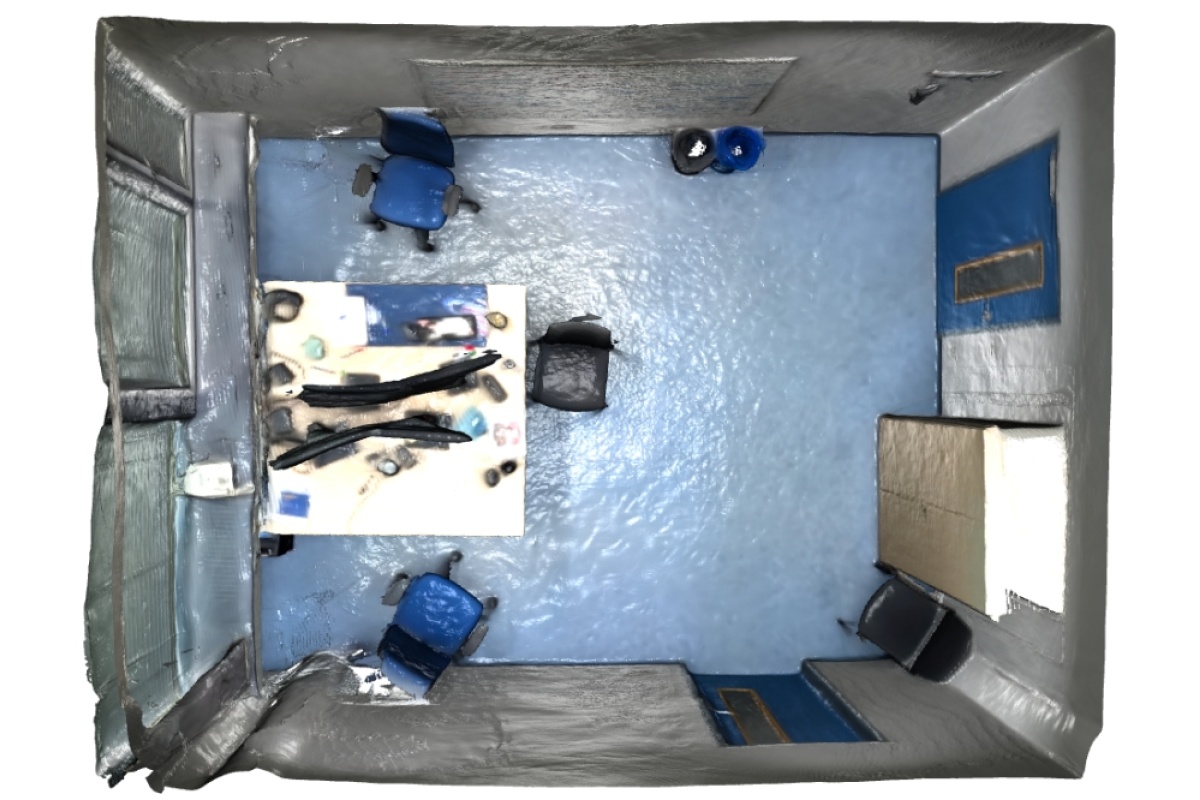

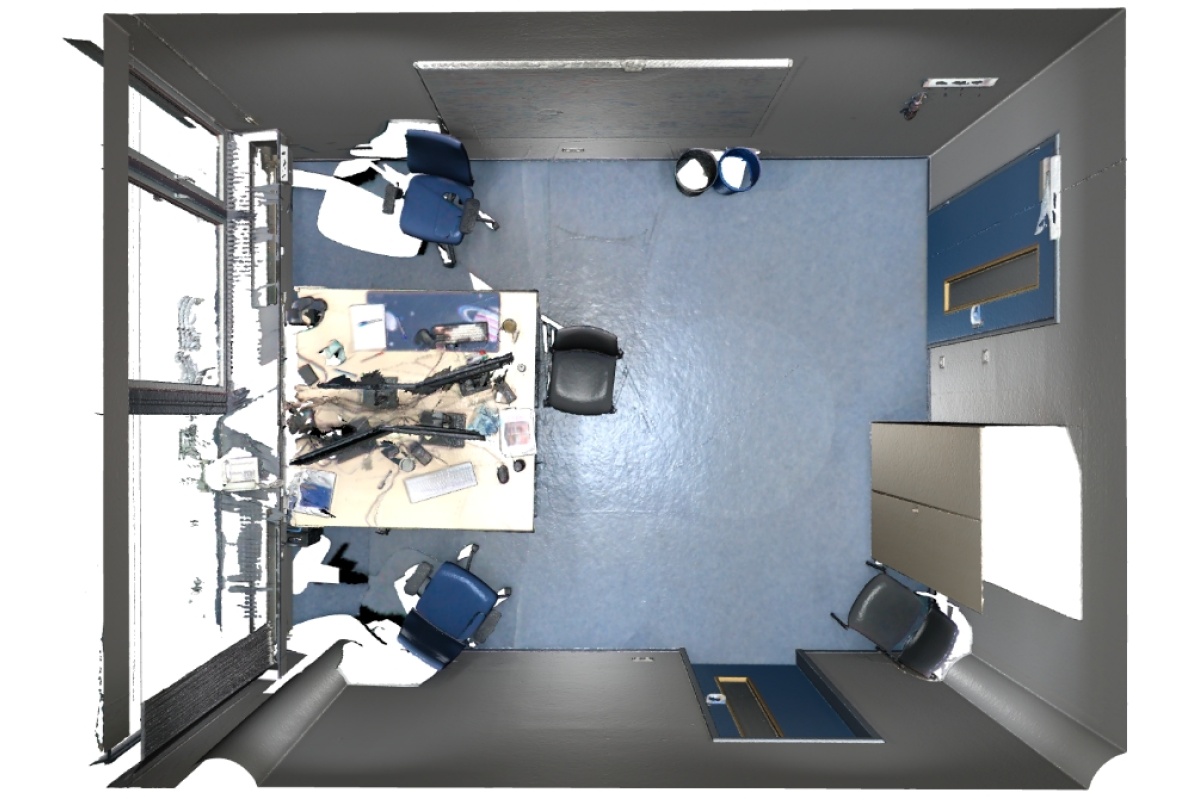

Semantic Reconstruction

We apply SpatialLM to our reconstructed mesh.

    @misc{zhang2024hislam2,
      title={HI-SLAM2: Geometry-Aware Gaussian SLAM for Fast Monocular Scene Reconstruction},
      author={Wei Zhang and Qing Cheng and David Skuddis and Niclas Zeller and Daniel Cremers and Norbert Haala},
      year={2024},
      eprint={2411.17982},
      archivePrefix={arXiv},
      primaryClass={cs.RO},
      url={https://arxiv.org/abs/2411.17982},
    }